Enhancing ChatGPT With Infinite External Memory Using Vector Database and ChatGPT Retrieval Plugin

Allowing GPT to access infinite external memory

GPT is an incredibly powerful tool for natural language processing tasks. However, when it comes to customised tasks, its capacity can be limited by the input token size.

For example, GPT-3.5 is limited to 4,096 tokens, but you may have a very long text (e.g. a book) that you want to ask questions about. While fine-tuning GPT with your private data is a potential solution, it can be a complex and expensive process, requiring high computational power and expertise in machine learning.

Fortunately, there is an alternative solution that can enhance GPT’s performance without requiring any changes to the model itself: using an external vector database to store your data and letting GPT retrieve relevant data to answer your prompting question. In this step-by-step guide, we will explore how to give GPT infinite external memory using a vector database, unlocking its potential for customised tasks.

This guide is designed for anyone who wants to use GPT to analyze specific data, regardless of their experience with machine learning or database management.

General Idea

Let’s draft the general idea of this project:

- Divide your long text into small chunks that are consumable by GPT

- Store each chunk in the vector database. Each chunk is indexed by a chunk embedding vector

- When asking a question to GPT, convert the question to a question embedding vector first

- Use the question embedding vector to search for related chunks in the vector database

- Combine the retrieved chunks and the question into a prompt, and feed it to GPT

- Get the response from GPT
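The steps above can be sketched in a few lines of plain Python. This is only a toy illustration: the real demo uses an embedding model and a vector database, while here a crude word-overlap score stands in for embedding similarity. The function names (chunk_text, overlap_score, retrieve, build_prompt) and the sample text are my own inventions for this sketch.

```python
import re
from typing import List, Set


def chunk_text(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def words_of(text: str) -> Set[str]:
    """Lowercase alphabetic words of a text, as a set."""
    return set(re.findall(r"[a-z]+", text.lower()))


def overlap_score(chunk: str, question: str) -> int:
    """Stand-in for embedding similarity: number of shared words."""
    return len(words_of(chunk) & words_of(question))


def retrieve(chunks: List[str], question: str, top_k: int) -> List[str]:
    """Return the top_k chunks ranked by the stand-in score."""
    ranked = sorted(chunks, key=lambda c: overlap_score(c, question), reverse=True)
    return ranked[:top_k]


def build_prompt(chunks: List[str], question: str) -> str:
    """Combine retrieved chunks and the question into one prompt string."""
    context = "\n\n".join(chunks)
    return f"{context}\n\nBy considering the text above, answer the question: {question}"


# Made-up sample text, only for illustration.
book = ("The red dragon sleeps under the mountain. "
        "The river city trades in silver and salt. "
        "The old queen rules the river city wisely.")
question = "Who rules the river city?"
chunks = chunk_text(book, max_words=8)
top = retrieve(chunks, question, top_k=1)
prompt = build_prompt(top, question)
print(prompt)
```

The resulting prompt is what would be sent to GPT in the last two steps.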

In this tutorial, I will implement a demo project to achieve these steps allowing GPT to access infinite external memory.

In the sample data, I will prepare some customized text files, not seen by GPT before, and input them as the GPT memory. Then, we can ask questions about these large input texts, which was not possible with plain ChatGPT due to the input token limit.

Some Terms

This article is meant to be accessible to all readers, regardless of their background in machine learning or database management. If you are already familiar with these topics, feel free to skip this section. For those who are new to these concepts, I will briefly explain the key terms to help you understand the content better.

What is embedding?

An embedding is just a vector: a list of numbers that encodes semantic meaning in a form a machine can work with.

This is useful because it allows computers to understand the meanings of words and how they relate to each other, even though they are just a bunch of letters to a computer.

For example, let’s say we have the words “dog” and “cat”. We can represent “dog” as a vector of numbers, and “cat” as another vector. If you compare “dog” and “cat” vectors, they are mathematically close together because they are both common pets.

In our case, when a question is submitted, it is transformed into a question embedding. By comparing the vector representation of the question to the vector representations of the chunks in the database, it is possible to retrieve the most relevant chunks to that question.
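To make "mathematically close" concrete, here is a minimal sketch using cosine similarity, one common way to compare embedding vectors. The three-dimensional vectors below are invented for illustration; real embeddings from text-embedding-ada-002 have 1536 dimensions and come from the model, not from hand-picked numbers.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, made up for this example.
dog = [0.9, 0.8, 0.1]
cat = [0.8, 0.9, 0.2]
car = [0.1, 0.2, 0.9]

print(cosine_similarity(dog, cat))  # close to 1: similar meaning
print(cosine_similarity(dog, car))  # much lower: less related
```

With these made-up numbers, "dog" and "cat" score far higher than "dog" and "car", which is the behavior we rely on when matching a question to chunks.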

What is a vector database?

A vector database is a type of database that stores information as vectors or arrays of numbers. Each piece of information is represented as a vector, where each number in the vector corresponds to a specific attribute or feature of the data.

In this case, you can use a vector as the index to search and retrieve data from the database. The query vector does not need to exactly match the database vectors. The database engine can retrieve the data indexed by close vectors in an efficient way. In our case, it retrieves chunks semantically related to your question.
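As a rough mental model, a vector database behaves like the tiny in-memory store below. This is a sketch under my own assumptions, not how Pinecone is implemented: TinyVectorStore is a hypothetical class that scans every stored vector, whereas real vector databases use index structures to find close vectors efficiently.

```python
import math
from typing import Dict, List, Sequence, Tuple


class TinyVectorStore:
    """Brute-force stand-in for a vector database (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self._items: Dict[str, Tuple[List[float], str]] = {}

    def upsert(self, item_id: str, vector: Sequence[float], text: str) -> None:
        """Insert or overwrite the entry stored under item_id."""
        self._items[item_id] = (list(vector), text)

    def query(self, vector: Sequence[float], top_k: int) -> List[str]:
        """Return the texts whose vectors are closest (Euclidean) to the query."""
        ranked = sorted(self._items.values(),
                        key=lambda item: math.dist(item[0], vector))
        return [text for _, text in ranked[:top_k]]


store = TinyVectorStore()
store.upsert("a", [1.0, 0.0], "chunk about dragons")
store.upsert("b", [0.0, 1.0], "chunk about cities")
store.upsert("c", [0.9, 0.1], "chunk about wyverns")

# The query vector matches no stored vector exactly, yet close ones are found.
print(store.query([0.8, 0.1], top_k=2))
```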

Design

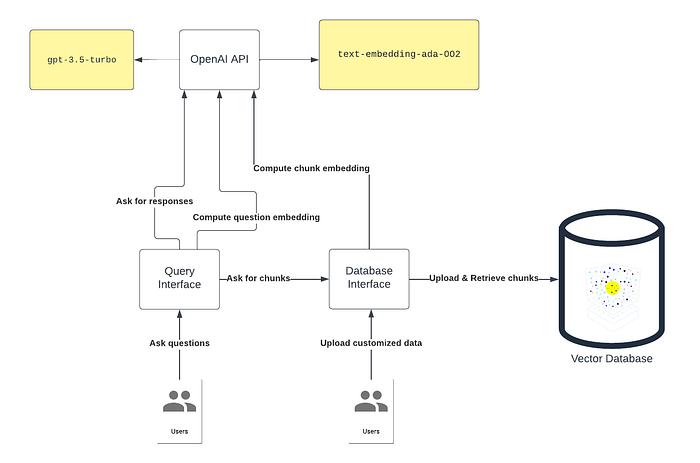

Here is my architecture for this tutorial’s demo. In this tutorial, I will cover how to implement this architecture step-by-step.

As described in the above section, users can do two things:

- Upload external data to the database

- Ask questions about the external data

So two users are present in the diagram.

We use two OpenAI models in this demo:

- gpt-3.5-turbo — your most familiar model: ChatGPT.

- text-embedding-ada-002 — an OpenAI model specifically designed for generating accurate embedding vectors at a very low cost

Query Interface:

- It takes the user’s input question and calls text-embedding-ada-002 to generate an embedding. With this embedding vector, it then queries the Database Interface to search for chunks related to the question

- It combines the retrieved chunks with the user’s original question and queries gpt-3.5-turbo (ChatGPT)

- Then it returns ChatGPT’s answer back to users.

Database Interface:

- Search: it takes the user’s question from Query Interface and queries the vector database

- Insert: it takes long raw text from the user, chunks the text into small pieces, converts each piece into an embedding vector, and inserts the <embedding_vector, chunk> pairs into the database.

Vector Database:

- The actual database behind the database interface. In this demo, it is Pinecone Database

Tools

The above design sounds like a complicated project, but we don’t need to reinvent the wheel. Here is a list of tools we need to implement this project.

- OpenAI API: it calls the two models mentioned above

- Pinecone: vector database

- GPT Retrieval Plugin: a recently released tool from OpenAI. This is our Database Interface, handling all chunking, embedding model calls, and vector database interaction

- FastAPI: a fast web framework for building APIs with Python. This is the built-in framework for GPT Retrieval Plugin

Setup GPT Retrieval Plugin (Database Interface)

GPT Retrieval Plugin handles text chunking as well as vector database search and insert. It is a recently released tool from OpenAI. It runs as a FastAPI-backed server on your local machine.

Its GitHub page already covers the configuration steps. I will go through the ones required for this demo:

Clone the project:

    git clone https://github.com/openai/chatgpt-retrieval-plugin.git

Navigate to the cloned repository directory:

    cd /path/to/chatgpt-retrieval-plugin

Install poetry:

Python Poetry is a dependency management and packaging tool for Python projects. It is similar to NPM if you are a JS developer.

All required dependencies for this project are specified in the Poetry pyproject.toml file in the GitHub project.

    pip install poetry

Create a new virtual environment that uses Python 3.10:

This will make your cmd/terminal run under the virtual environment of poetry and access the project’s dependencies.

    poetry env use python3.10
    poetry shell

Then you run:

    poetry install

With poetry, you don’t need to install each dependency by yourself. The above one-line command will install everything required in this virtual environment to run the project.

Then you need to set up some Environment Variables for your shell. Retrieval Plugin will access that information. Replace <…> with your own resource, and run the following scripts in your shell:

    export DATASTORE=pinecone
    export BEARER_TOKEN=<your_database_interface_api_key>
    export OPENAI_API_KEY=<your_openai_api_key>
    export PINECONE_API_KEY=<your_pinecone_api_key>
    export PINECONE_ENVIRONMENT=<your_pinecone_region_name>
    export PINECONE_INDEX=<your_index_name>

DATASTORE is the name of your chosen vector database provider. Here I will just use Pinecone. Other vector databases are also supported.

OPENAI_API_KEY is the API key for calling OpenAI’s models programmatically. Get a key from https://platform.openai.com/

I will cover how to acquire the PINECONE resources in a later section.

BEARER_TOKEN is a security token for your Database Interface: the API key that clients must present when calling your own server. Create one using https://jwt.io/. On the Decoded tab, in the “Payload” section:

    {
      "sub": "1234567890",
      "name": "Write any name",
      "iat": 1516239022
    }

Change the values to whatever you like. Then go back to the Encoded tab and copy the generated token. Paste it into the export command above. Your server reads this variable when it starts and uses it as your security token. Save the token somewhere in a file, because you will need it later for sending requests.

Pinecone Vector Database Setup

Now we set up a Pinecone Vector Database.

Go to https://www.pinecone.io/, get an account, and log in.

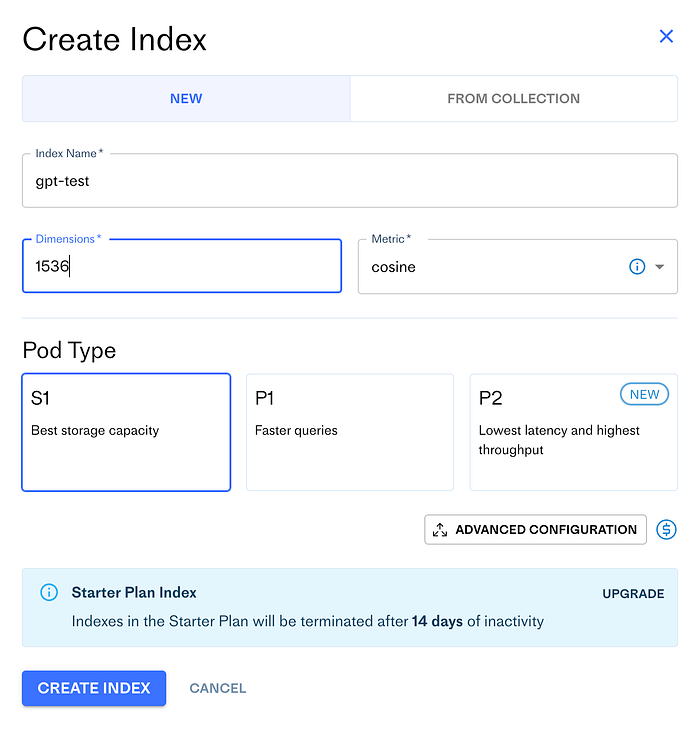

Go to your dashboard and click “Create Index”. This will bring you to the page for creating your first vector database:

You can follow my setup here. Remember to use 1536 as your dimensions. This dimension should be the same as your embedding model, which is text-embedding-ada-002’s output embedding size.

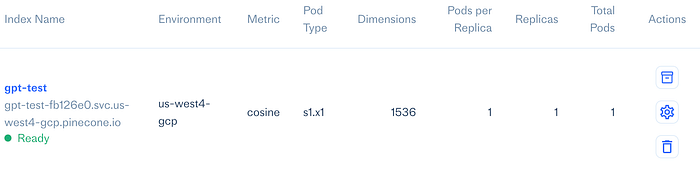

Your dashboard should then look like this:

Once your index shows a “Ready” status, you are all set.

Remember that we previously had these shell variables to set:

    export PINECONE_API_KEY=<your_pinecone_api_key>
    export PINECONE_ENVIRONMENT=<your_pinecone_region_name>
    export PINECONE_INDEX=<your_index_name>

Get the PINECONE_API_KEY from the dashboard’s “API KEY”.

PINECONE_ENVIRONMENT is “Environment” on your index. In my case it is “us-west4-gcp”.

PINECONE_INDEX is the index name. In the above example, it is gpt-test.

Set these up and run them in your command line.
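Before starting the server, it can help to confirm that all six variables are actually visible to your shell session. The snippet below is an optional sanity check of my own, not part of the Retrieval Plugin; the helper name missing_vars is hypothetical, and the variable names are the ones exported above.

```python
import os
from typing import List, Mapping

# The environment variables exported in the setup steps above.
REQUIRED_VARS = [
    "DATASTORE", "BEARER_TOKEN", "OPENAI_API_KEY",
    "PINECONE_API_KEY", "PINECONE_ENVIRONMENT", "PINECONE_INDEX",
]


def missing_vars(environ: Mapping[str, str]) -> List[str]:
    """Return the required variable names that are unset or empty."""
    return [name for name in REQUIRED_VARS if not environ.get(name)]


if __name__ == "__main__":
    missing = missing_vars(os.environ)
    if missing:
        print("Missing environment variables: " + ", ".join(missing))
    else:
        print("All required environment variables are set.")
```

Run it in the same shell where you ran the export commands, since exported variables only exist in that session.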

Run Database Interface Server

The config is ready. Under the project directory, run:

    poetry run start

It will start your Database Interface server. Open http://0.0.0.0:8000/docs#/ in your browser (if that address does not load on your system, try http://localhost:8000/docs). If the page is up, you have successfully implemented the Database Interface module with Retrieval Plugin.

Database Interface Helpers

I implemented some helpers for you to upload and query data with the vector database through the Retrieval Plugin.

Open your Python editor (I assume you know Python and have a working Python setup).

Create a file secrets.py to store all of your required API keys. Note that a local file with this name shadows Python’s standard-library secrets module; if you run into import problems, pick another name and update the imports in the code accordingly:

    OPENAI_API_KEY = "<your_openai_api>"
    DATABASE_INTERFACE_BEARER_TOKEN = "<your_database_interface_api_key>"

Create a file database_utils.py and paste the following code:

    import os
    from typing import Any, Dict

    import requests

    from secrets import DATABASE_INTERFACE_BEARER_TOKEN

    SEARCH_TOP_K = 3


    def upsert_file(directory: str):
        """
        Upload all files under a directory to the vector database.
        """
        url = "http://0.0.0.0:8000/upsert-file"
        headers = {"Authorization": "Bearer " + DATABASE_INTERFACE_BEARER_TOKEN}
        for filename in os.listdir(directory):
            file_path = os.path.join(directory, filename)
            if os.path.isfile(file_path):
                with open(file_path, "rb") as f:
                    file_content = f.read()
                # Send one file per request so earlier files are not re-sent.
                files = [("file", (filename, file_content, "text/plain"))]
                response = requests.post(url,
                                         headers=headers,
                                         files=files,
                                         timeout=600)
                if response.status_code == 200:
                    print(filename + " uploaded successfully.")
                else:
                    print(
                        f"Error: {response.status_code} {response.content} for uploading "
                        + filename)


    def upsert(id: str, content: str):
        """
        Upload one piece of text to the database.
        """
        url = "http://0.0.0.0:8000/upsert"
        headers = {
            "accept": "application/json",
            "Content-Type": "application/json",
            "Authorization": "Bearer " + DATABASE_INTERFACE_BEARER_TOKEN,
        }
        data = {
            "documents": [{
                "id": id,
                "text": content,
            }]
        }
        response = requests.post(url, json=data, headers=headers, timeout=600)
        if response.status_code == 200:
            print("uploaded successfully.")
        else:
            print(f"Error: {response.status_code} {response.content}")


    def query_database(query_prompt: str) -> Dict[str, Any]:
        """
        Query vector database to retrieve chunk with user's input question.
        """
        url = "http://0.0.0.0:8000/query"
        headers = {
            "Content-Type": "application/json",
            "accept": "application/json",
            "Authorization": f"Bearer {DATABASE_INTERFACE_BEARER_TOKEN}",
        }
        data = {"queries": [{"query": query_prompt, "top_k": SEARCH_TOP_K}]}
        response = requests.post(url, json=data, headers=headers, timeout=600)
        if response.status_code == 200:
            result = response.json()
            # process the result
            return result
        else:
            raise ValueError(f"Error: {response.status_code} : {response.content}")


    if __name__ == "__main__":
        upsert_file("<directory_to_the_sample_data>")

It has three functions. Nothing special here. They are all API calls to your Retrieval Plugin FastAPI server. The server will then handle the actual call to the Pinecone database at the back.

You can read the docstring of each function to get a brief idea of what it does.
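To see what actually goes over the wire, the sketch below isolates the request headers and JSON bodies used by the upsert and query helpers. The body shapes are copied from the helper code; the function names auth_headers, upsert_body, and query_body are my own and exist only in this example.

```python
from typing import Any, Dict


def auth_headers(bearer_token: str) -> Dict[str, str]:
    """Headers sent with every JSON request to the Database Interface."""
    return {
        "accept": "application/json",
        "Content-Type": "application/json",
        "Authorization": "Bearer " + bearer_token,
    }


def upsert_body(doc_id: str, content: str) -> Dict[str, Any]:
    """JSON body for the /upsert endpoint: a list with one document."""
    return {"documents": [{"id": doc_id, "text": content}]}


def query_body(question: str, top_k: int) -> Dict[str, Any]:
    """JSON body for the /query endpoint: a list with one query."""
    return {"queries": [{"query": question, "top_k": top_k}]}


print(upsert_body("doc-1", "Some text to store."))
print(query_body("Who rules Auroria?", top_k=3))
```

Because both bodies wrap their content in a list, the same endpoints can in principle take several documents or several queries in one request.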

There are a few parameters you want to set up.

First is SEARCH_TOP_K in the above file. It determines how many of the most relevant chunks to retrieve for the user’s input question. In the example, I use 3, but you can play with this number. A larger top-K gives GPT more data to consume, but it may also introduce less relevant results.

Second is the chunking parameter in chatgpt-retrieval-plugin/services/chunks.py of the Retrieval Plugin. You can read what each parameter is doing in the code comment.

My setting is:

    # Constants
    CHUNK_SIZE = 1024  # The target size of each text chunk in tokens
    MIN_CHUNK_SIZE_CHARS = 350  # The minimum size of each text chunk in characters
    MIN_CHUNK_LENGTH_TO_EMBED = 5  # Discard chunks shorter than this
    EMBEDDINGS_BATCH_SIZE = 128  # The number of embeddings to request at a time
    MAX_NUM_CHUNKS = 10000  # The maximum number of chunks to generate from a text

The original CHUNK_SIZE value was set to 200, but during my experiments, I found that this size may break up input text into pieces that are too small. This may break the context of the input text.

Again, feel free to play with these parameters.

Prepare Sample Data

Let’s prepare some sample data and feed it into the database.

You can use my sample data. This is a fantasy world story made up by GPT from scratch. But feel free to use your own sample data. You may take a look at my data to check the format: it is just a set of text files in natural language.

Next, let’s upload the data to the database with my helper functions. A sample upload run is implemented in database_utils.py. In the file, replace <directory_to_the_sample_data> with the sample data directory.

You can just run:

    python database_utils.py

Feel free to modify these helper functions to suit your own case. The file also has an upsert(...) function that allows you to upload plain strings to the database.

If you see some success messages, you are all set:

    background uploaded successfully.
    cities uploaded successfully.
    leaders uploaded successfully.
    factions uploaded successfully.
    events uploaded successfully.

Query Interface

For this part, we will use Python to implement a simple Query Interface that can interact with Database Interface and OpenAI API, and handle the user’s chat and response. I have already written the code for you.

Under the project folder, create a directory called query_interface. Make sure secrets.py is importable from this directory (for example, by placing it there).

Create a file chat_utils.py. It has functions that handle the user’s chat and query the vector database interface, as described in the architecture diagram. Refer back to the diagram if you need high-level context.

Paste the following code:

    import logging
    from typing import Any, Dict, List

    import openai
    import requests

    from secrets import DATABASE_INTERFACE_BEARER_TOKEN


    def query_database(query_prompt: str) -> Dict[str, Any]:
        """
        Query vector database to retrieve chunk with user's input questions.
        """
        url = "http://0.0.0.0:8000/query"
        headers = {
            "Content-Type": "application/json",
            "accept": "application/json",
            "Authorization": f"Bearer {DATABASE_INTERFACE_BEARER_TOKEN}",
        }
        data = {"queries": [{"query": query_prompt, "top_k": 5}]}
        response = requests.post(url, json=data, headers=headers, timeout=600)
        if response.status_code == 200:
            result = response.json()
            # process the result
            return result
        else:
            raise ValueError(f"Error: {response.status_code} : {response.content}")


    def apply_prompt_template(question: str) -> str:
        """
        A helper function that applies additional template on user's question.
        Prompt engineering could be done here to improve the result. Here I will just use a minimal example.
        """
        prompt = f"""
        By considering above input from me, answer the question: {question}
        """
        return prompt


    def call_chatgpt_api(user_question: str, chunks: List[str]) -> Dict[str, Any]:
        """
        Call chatgpt api with user's question and retrieved chunks.
        """
        # Each retrieved chunk becomes its own user message.
        messages = list(
            map(lambda chunk: {
                "role": "user",
                "content": chunk
            }, chunks))
        question = apply_prompt_template(user_question)
        messages.append({"role": "user", "content": question})
        # Note: openai.ChatCompletion is the interface of openai versions before 1.0.
        response = openai.ChatCompletion.create(
            model="gpt-3.5-turbo",
            messages=messages,
            max_tokens=1024,
            temperature=0.7,  # High temperature leads to a more creative response.
        )
        return response


    def ask(user_question: str) -> str:
        """
        Handle user's questions.
        """
        # Get chunks from database.
        chunks_response = query_database(user_question)
        chunks = []
        for result in chunks_response["results"]:
            for inner_result in result["results"]:
                chunks.append(inner_result["text"])
        logging.info("User's questions: %s", user_question)
        logging.info("Retrieved chunks: %s", chunks)
        response = call_chatgpt_api(user_question, chunks)
        logging.info("Response: %s", response)
        return response["choices"][0]["message"]["content"]

Now, create a main.py and paste the following code:

    import logging

    import openai

    from chat_utils import ask
    from secrets import OPENAI_API_KEY

    if __name__ == "__main__":
        # Configure the API key and logging once, before the chat loop.
        openai.api_key = OPENAI_API_KEY
        logging.basicConfig(level=logging.WARNING,
                            format="%(asctime)s %(levelname)s %(message)s")
        while True:
            user_query = input("Enter your question: ")
            print(ask(user_query))

Now let’s test the final result! Make sure your Retrieval Plugin server is running.

Run this main.py file:

    python main.py

It will prompt you for a question.

I wrote:

“Who are some major characters in the world of Auroria and list their stories”

It replies:

“1. Queen Ardenia — She is the queen of Auroria and the ruler of the kingdom. Her story is one of determination and strength, as she fought to reclaim her throne from her treacherous uncle and restore order to the kingdom.

2. Sir Cedric — He is the commander of the Order of the Knights and one of Queen Ardenia’s most trusted advisors. His story is one of loyalty and bravery, as he has led his knights into battle against many powerful enemies and always emerged victorious.

3. Merlyn — He is the leader of the Circle of Wizards and one of the most powerful sorcerers in all of Auroria. His story is one of mystery and intrigue, as he has spent his life studying the arcane arts and using his magic to protect the kingdom from all manner of threats.

4. The Shadow — He is the enigmatic leader of the Thieves’ Guild, and his true identity is known to only a few. His story is one of secrecy and cunning, as he has built a powerful network of thieves and assassins who carry out his bidding in the shadows.

5. Zoltar the Terrible — He is a powerful sorcerer who seeks to conquer the kingdom of Auroria and bend its people to his will. His story is one of villainy and treachery, as he uses his dark magic to sow chaos and destruction wherever he goes.

6. Elara — She is a skilled archer and a member of the Elven race. Her story is one of bravery and determination, as she has fought alongside the Knights and other factions to defend her home and her people from harm.”

Done! GPT can now use external memory to answer this question.

A vector database is quite scalable: you can load data of practically any size, such as millions of words, and let GPT answer related questions.
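If you want to see the data handling of ask() and call_chatgpt_api() in isolation, the sketch below reproduces the two pure steps without any network calls: flattening the nested query response and assembling the message list. The helper names extract_chunks and build_messages and the sample response are mine; the response shape is inferred from how ask() reads it.

```python
from typing import Any, Dict, List


def extract_chunks(query_response: Dict[str, Any]) -> List[str]:
    """Flatten the nested results -> results -> text structure into a list."""
    return [
        inner["text"]
        for result in query_response["results"]
        for inner in result["results"]
    ]


def build_messages(chunks: List[str], question: str) -> List[Dict[str, str]]:
    """One user message per chunk, followed by the templated question."""
    messages = [{"role": "user", "content": chunk} for chunk in chunks]
    messages.append({
        "role": "user",
        "content": f"By considering above input from me, answer the question: {question}",
    })
    return messages


# Hypothetical response, shaped like what ask() reads in chat_utils.py.
sample_response = {
    "results": [{
        "query": "Who rules the city?",
        "results": [{"text": "first chunk"}, {"text": "second chunk"}],
    }]
}
messages = build_messages(extract_chunks(sample_response), "Who rules the city?")
for message in messages:
    print(message)
```

Keeping these steps free of API calls makes it easy to experiment with the prompt layout before spending tokens.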

Discussions

This is a very minimal implementation of external memory for GPT.

For a more complete project, there are more questions that you need to consider. I list some design decisions that you need to investigate.

Chunking Techniques

The simplest consideration is the size of your chunks. A small chunk size makes it easier to pinpoint the relevant piece of text, but it may also lose the broader context of your text.

You can also consider more sophisticated chunking methods, such as overlap chunking, which lets two consecutive chunks share some tokens. You then need to decide on the overlap length. Should it be a fixed number or a percentage? Can you overlap at the paragraph level rather than the token level? How do you chunk data that is not plain prose, e.g. a CSV file?
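As a minimal sketch of overlap chunking with a fixed overlap length, consider the sliding window below. The function chunk_with_overlap is a hypothetical helper of mine, and single letters stand in for tokens.

```python
from typing import List


def chunk_with_overlap(tokens: List[str], chunk_size: int, overlap: int) -> List[List[str]]:
    """Slide a window of chunk_size tokens, stepping by chunk_size - overlap."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        # Stop once the window has reached the end of the token list.
        if start + chunk_size >= len(tokens):
            break
    return chunks


tokens = "a b c d e f g h i j".split()
overlapped = chunk_with_overlap(tokens, chunk_size=4, overlap=2)
for chunk in overlapped:
    print(chunk)
```

Each chunk repeats the last two tokens of the previous one, so a sentence cut at a chunk boundary still appears whole in at least one chunk, at the cost of storing more data.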

There are lots of decisions to think about when building a large system. The best settings depend on your own use case and experiments.

Utilizing Metadata

In this simple demo, the vector database only stores the embedding vector and the data. However, you can store additional metadata for any chunk. The metadata could include the author of the text, the source of the chunk (e.g. title of the text), the creation time of the text, and the format of the text (e.g. plain text, csv).

Metadata can be used by the vector database for more advanced search. For example, you can restrict retrieval to data from a certain time range to make the results more relevant.

Metadata also allows you to do better chunk processing. For example, you may use metadata to record the position of a chunk in an article. When combining the chunks, you can merge them sequentially based on their position. This will enhance the contextual information when GPT is consuming your merged prompt.
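Both ideas, filtering by time range and merging by position, can be sketched on the client side as follows. The Chunk dataclass and its field names are assumptions made for this example; in practice a vector database would usually apply the filter during the search itself.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class Chunk:
    text: str
    source: str      # e.g. the title of the source document
    created: date    # creation time of the text
    position: int    # index of the chunk within its source document


def filter_by_date(chunks: List[Chunk], start: date, end: date) -> List[Chunk]:
    """Keep only chunks created within [start, end]."""
    return [c for c in chunks if start <= c.created <= end]


def merge_in_order(chunks: List[Chunk]) -> str:
    """Join chunks by source and position so the prompt reads in document order."""
    ordered = sorted(chunks, key=lambda c: (c.source, c.position))
    return "\n".join(c.text for c in ordered)


# Made-up retrieval result, deliberately out of order.
retrieved = [
    Chunk("The war ended.", "events", date(2023, 3, 2), 2),
    Chunk("An old rumor.", "events", date(2021, 1, 1), 0),
    Chunk("The war began.", "events", date(2023, 3, 1), 1),
]
recent = filter_by_date(retrieved, date(2023, 1, 1), date(2023, 12, 31))
merged = merge_in_order(recent)
print(merged)
```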

More complicated prompt engineering tricks

In this demo, the prompt is also very simple.

You may use advanced prompt engineering to further enhance your retrieval.

For example, you can use chains of prompts. The first prompt annotates metadata: you give GPT your retrieval metadata format along with the question and ask it to fill in the metadata for you. For example, GPT can analyze the question and tag whether the user is asking about data from a certain time range. You then use this metadata for the retrieval. In the second prompt, you merge all retrieved chunks and ask GPT the actual question.
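The first link of such a chain could look roughly like the sketch below. Everything here is an assumption for illustration: the prompt wording, the start_date/end_date keys, and the helper names are mine, and the model is passed in as a plain function so the example runs without an API call.

```python
import json
from typing import Callable, Dict


def build_filter_prompt(question: str) -> str:
    """First prompt in the chain: ask the model for a metadata filter as JSON."""
    return (
        "Extract a date range from the question below. "
        'Reply with JSON only, e.g. {"start_date": "2023-01-01", "end_date": "2023-12-31"}, '
        "or {} if no range is mentioned.\n"
        f"Question: {question}"
    )


def extract_filter(question: str, llm: Callable[[str], str]) -> Dict[str, str]:
    """Call the model and parse its reply, falling back to no filter."""
    reply = llm(build_filter_prompt(question))
    try:
        parsed = json.loads(reply)
    except json.JSONDecodeError:
        return {}
    return parsed if isinstance(parsed, dict) else {}


def fake_llm(prompt: str) -> str:
    """A fake model for demonstration; a real one would call the chat API."""
    return '{"start_date": "2023-01-01", "end_date": "2023-03-31"}'


metadata_filter = extract_filter("What happened in early 2023?", fake_llm)
print(metadata_filter)
```

The parsed filter would then be passed along with the retrieval query, and the second prompt proceeds as in the demo. Falling back to an empty filter keeps the chain working when the model replies with something that is not valid JSON.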

Different prompt templates can be used for different tasks. You can even index prompt templates in a separate prompt template vector database. Based on the question, you can retrieve the template from the database first, fill the template, and then do the actual retrieval from your text database.

Thank you for reading this guide. I hope you found it valuable; please let me know in the comments.
